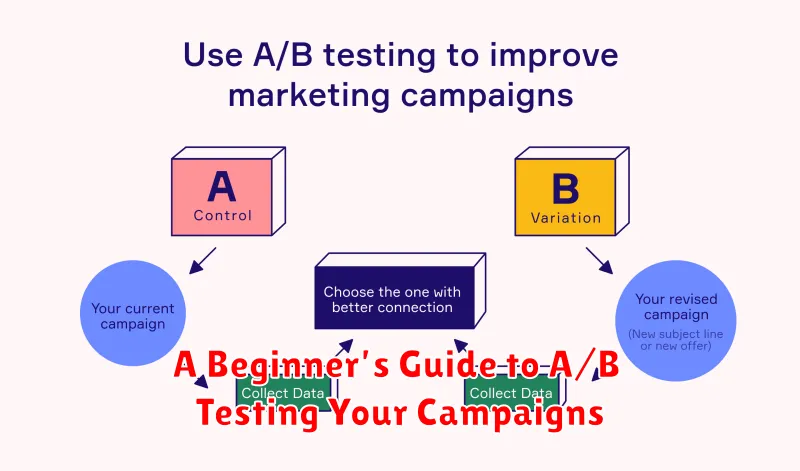

A/B testing, also known as split testing, is a crucial component of successful marketing campaigns. It allows you to compare two versions of a campaign element, such as an email subject line, a call-to-action button, or even entire landing pages, to determine which performs better. By understanding how different variations impact key metrics like conversion rates, click-through rates, and engagement, you can optimize your campaigns for maximum effectiveness. This beginner’s guide will provide a comprehensive overview of A/B testing, equipping you with the knowledge and tools necessary to enhance your marketing efforts and achieve your desired results.

This guide covers the essential steps of conducting A/B tests, from formulating a hypothesis and defining your target audience to analyzing the results and implementing the winning variation. Whether you are new to marketing or refining existing strategies, it will help you make data-driven decisions that measurably improve your campaign performance.

What Is A/B Testing and Why Use It?

A/B testing, also known as split testing, is a method of comparing two versions of a webpage, email, or other marketing asset to determine which performs better. Visitors are randomly shown one of the two versions (A or B), and key metrics such as conversion rates, click-through rates, or bounce rates are tracked for each. The version that performs better on the chosen metric, by a margin large enough to be statistically significant, is considered the winner and is typically implemented permanently.
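Random assignment is often implemented by hashing a stable visitor identifier, so each person is placed in a group by chance but keeps seeing the same version on every visit. The sketch below is a minimal illustration under that assumption; the `user_id` values, the experiment name, and the `assign_variant` helper are hypothetical and do not describe how any particular testing tool works.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Return "A" or "B" for a visitor; the same inputs always give the same answer."""
    # Including the experiment name means different experiments bucket visitors independently.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 8 hex digits (32 bits) of the hash to a number in [0, 1).
    bucket = int(digest[:8], 16) / 2**32
    # Visitors below the split threshold see version A; everyone else sees version B.
    return "A" if bucket < split else "B"


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}", "cta-button-test")] += 1
    print(counts)  # roughly half of the visitors in each group
```

Hashing instead of calling a random number generator on every page view means no assignment table has to be stored, and a returning visitor is not switched between versions mid-test.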

The primary reason for conducting A/B testing is to make data-driven decisions about optimizing content and user experience. Instead of relying on guesswork or intuition, A/B testing provides empirical evidence to support changes. Over time, this can lead to higher conversion rates, improved engagement, and ultimately a better return on investment (ROI). Even seemingly small changes, like the color of a button or the wording of a headline, can sometimes have a measurable impact on performance.

Many elements can be tested this way, including headlines, call-to-action buttons, images, layouts, form fields, and even pricing. By systematically testing different variations, businesses can gain valuable insights into user behavior and preferences, ultimately leading to more effective marketing campaigns and a better overall user experience.

Deciding What to Test

Testing every element of every campaign is impractical given limited traffic, time, and budget. A strategic approach to choosing tests is therefore crucial. Prioritize the pages and messages that receive the most traffic, sit closest to a conversion (such as sign-up, pricing, or checkout pages), or show clear signs of underperformance, such as high bounce or drop-off rates. A well-defined testing scope ensures efficient use of resources and maximizes the chances of finding changes that matter.

Several factors contribute to deciding what to test. Business goals are paramount: tests should target the metrics that matter most to the business, such as sign-ups, sales, or qualified leads. Technical feasibility is also important, as some changes are difficult to build, or impossible to test cleanly, with the available tools and traffic. Regulations and compliance must be considered, particularly in industries with strict rules on advertising claims, pricing disclosures, or data privacy. Finally, data on user behavior, such as analytics funnels, can pinpoint where visitors hesitate or drop off and therefore where a test is most likely to pay off.

Effective test planning involves a careful balance of these factors. Prioritization should combine expected impact, business value, and the effort or risk involved. A transparent and well-documented testing roadmap ensures that all stakeholders understand what is being tested, why, and what outcomes are expected.
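One way to turn these factors into a ranked list of test ideas is a simple scoring heuristic such as ICE (Impact, Confidence, Ease). This framework is not described in the guide above. The sketch assumes each idea is scored from 1 to 10 on each dimension and averages the three scores; some teams multiply them instead. The example ideas and scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TestIdea:
    name: str
    impact: int      # expected effect on the key metric, 1-10
    confidence: int  # how strongly existing evidence supports the idea, 1-10
    ease: int        # how cheap the test is to build and run, 1-10

    @property
    def ice(self) -> float:
        # Plain average of the three scores.
        return (self.impact + self.confidence + self.ease) / 3


def prioritize(ideas: list[TestIdea]) -> list[TestIdea]:
    """Return the ideas sorted from highest to lowest ICE score."""
    return sorted(ideas, key=lambda idea: idea.ice, reverse=True)


if __name__ == "__main__":
    backlog = [
        TestIdea("Shorter checkout form", impact=8, confidence=6, ease=5),
        TestIdea("New hero image", impact=4, confidence=3, ease=9),
        TestIdea("Pricing page layout", impact=9, confidence=5, ease=3),
    ]
    for idea in prioritize(backlog):
        print(f"{idea.ice:.1f}  {idea.name}")
```

The scores are subjective. The value of the exercise lies in making the trade-offs explicit so that stakeholders can discuss them.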

Setting Up Hypotheses and Control Groups

A hypothesis is a testable statement predicting the relationship between two or more variables. It’s the starting point for any experiment, including an A/B test. A good hypothesis is specific, measurable, achievable, relevant, and time-bound (SMART). For example, instead of stating “fertilizer helps plants grow,” a stronger hypothesis would be “Applying 10 ml of nitrogen-rich fertilizer weekly will increase the growth rate of tomato plants by 15% over a period of eight weeks compared to plants receiving no fertilizer.” This provides a clear, quantifiable prediction that can be directly tested. A marketing equivalent might be “Changing the call-to-action button text from ‘Submit’ to ‘Get my free quote’ will increase form completions by 10% over four weeks compared to the current button.”

Control groups are essential for validating the impact of the independent variable, which is the factor being manipulated in the experiment (e.g., the fertilizer). The control group provides a baseline for comparison because it does not receive that treatment. In the fertilizer example, the control group would consist of tomato plants receiving no fertilizer. By comparing the growth rate of the fertilized plants (the experimental group) with that of the control group, researchers can determine whether the fertilizer truly influenced growth or whether other factors were responsible. In an A/B test, version A (the current design or message) usually serves as the control, and randomly assigning people to A or B keeps the two groups comparable.

Careful design of both the hypothesis and the control group is fundamental to drawing reliable conclusions from an experiment. The control group isolates the impact of the independent variable, while a well-formulated hypothesis provides a clear framework for interpreting the results and determining whether the experimental data support or refute the predicted relationship.
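A quantified hypothesis such as “increase growth by 15% compared to the control” is checked by computing the relative lift of the treatment over the control: (treatment - control) / control. The helper names below are hypothetical, and the numbers in the usage example are illustrative, not real results. Reaching the predicted lift in one sample does not by itself show that the difference is statistically significant.

```python
def relative_lift(control: float, treatment: float) -> float:
    """Relative change of the treatment over the control, e.g. 0.15 for +15%."""
    if control == 0:
        raise ValueError("control value must be non-zero")
    return (treatment - control) / control


def meets_prediction(control: float, treatment: float, predicted_lift: float) -> bool:
    """True if the observed relative lift is at least the lift the hypothesis predicted."""
    return relative_lift(control, treatment) >= predicted_lift


if __name__ == "__main__":
    # Illustrative average growth in cm: control plants vs. fertilized plants.
    control_growth, treated_growth = 20.0, 23.5
    lift = relative_lift(control_growth, treated_growth)
    print(f"observed lift: {lift:.1%}")
    print("meets the 15% prediction:", meets_prediction(control_growth, treated_growth, 0.15))
```

The same arithmetic applies to marketing metrics. For example, the control would be version A's conversion rate and the treatment would be version B's.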

Running Tests in Email and Ads

Testing is crucial for optimizing both email marketing campaigns and online advertising. A/B testing allows you to compare different versions of your emails or ads to determine which performs better. For emails, this could include variations in subject lines, calls to action, email copy, or sending times. For ads, variations might involve different headlines, images, ad copy, targeting parameters, or bidding strategies. By analyzing the results of these tests, you can identify the elements that resonate most with your audience, which can lead to higher open rates, click-through rates, and conversions.

Key metrics to track during testing depend on your campaign goals. For email, you might monitor open rates, click-through rates, conversion rates, and unsubscribe rates. For ads, you might focus on click-through rates, conversion rates, cost per click (CPC), and return on ad spend (ROAS). In a standard A/B test, it’s important to test one element at a time to isolate the impact of each change. This systematic approach makes it clear which change caused a difference and provides clearer insight into what drives performance.
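Each of these metrics is a simple ratio of raw counts, so the same calculation can be applied to each variation and the results compared side by side. Platforms define the denominators slightly differently. For example, email click-through rate is sometimes calculated per delivered email and sometimes per opened email. The sketch below assumes one common set of definitions, and the field names and example counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EmailStats:
    delivered: int
    opens: int
    clicks: int
    conversions: int
    unsubscribes: int

    def rates(self) -> dict[str, float]:
        # All email rates here are calculated per delivered email.
        return {
            "open_rate": self.opens / self.delivered,
            "click_through_rate": self.clicks / self.delivered,
            "conversion_rate": self.conversions / self.delivered,
            "unsubscribe_rate": self.unsubscribes / self.delivered,
        }


@dataclass
class AdStats:
    impressions: int
    clicks: int
    conversions: int
    spend: float
    revenue: float

    def rates(self) -> dict[str, float]:
        return {
            "click_through_rate": self.clicks / self.impressions,
            "conversion_rate": self.conversions / self.clicks,  # per click
            "cost_per_click": self.spend / self.clicks,
            "return_on_ad_spend": self.revenue / self.spend,
        }


if __name__ == "__main__":
    variant_a = EmailStats(delivered=10_000, opens=2_500, clicks=400, conversions=60, unsubscribes=20)
    ad_b = AdStats(impressions=50_000, clicks=1_000, conversions=50, spend=500.0, revenue=2_000.0)
    print(variant_a.rates())
    print(ad_b.rates())
```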

Implementing a robust testing strategy helps ensure that your marketing efforts are data-driven and continually improving. Regularly testing and refining your emails and ads maximizes your reach and impact, ultimately leading to greater success in achieving your marketing objectives.

Tools for A/B Testing Campaigns

A/B testing is crucial for optimizing marketing campaigns. Several tools simplify this process, allowing marketers to test different versions of emails, landing pages, and ad creatives. Using these tools can lead to significant improvements in conversion rates, click-through rates, and overall campaign performance. Choosing the right tool depends on the specific needs of your campaign and the level of sophistication required.

Popular A/B testing tools offer a range of features, from basic A/B testing functionality to advanced features like multivariate testing and personalization. Popular options include Optimizely and VWO. Google Optimize, once a common free choice, was discontinued by Google in September 2023. These tools typically provide an intuitive interface for creating variations, managing traffic allocation, and analyzing results. Key features to consider include ease of use, integration with existing marketing platforms, and robust reporting capabilities.

When selecting a tool, assess your budget and technical expertise. Some platforms offer free plans with limited features, while others require a paid subscription for more advanced functionalities. Consider the level of support provided, including documentation and customer service. Ultimately, the right A/B testing tool empowers marketers to make data-driven decisions and maximize their campaign ROI.

How Long Should a Test Run?

The ideal test duration depends mainly on how much traffic (or how large an email list) you have, your baseline conversion rate, and the smallest improvement you want to be able to detect. A test needs enough visitors in each variation to reach the required sample size. A page with thousands of daily visitors may get there within days, while a low-traffic page may need several weeks. Decide the sample size and duration before launching. If you need results quickly, prioritize tests on high-traffic pages.

As a rule of thumb, run tests for at least one full business cycle, usually a minimum of one week, so that weekday and weekend behavior is represented in both variations. Avoid stopping a test the moment it first looks significant. Checking repeatedly and stopping early inflates the chance of declaring a false winner. Conversely, very long tests can be distorted by seasonality, cleared cookies, or overlapping campaigns. Aim for the shortest duration that reaches the planned sample size.

Ultimately, the appropriate test duration is a strategic decision based on your campaign’s traffic, goals, and constraints. There is no one-size-fits-all answer. Focus on collecting enough data for a reliable result on the metric that matters most. At the same time, keep tests short enough that they do not unnecessarily delay the next round of improvements.
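For a test that compares conversion rates, the required sample size is often estimated with the standard normal-approximation formula for comparing two proportions. The sketch below uses that formula with the conventional defaults of a 5% two-sided significance level and 80% power. It then converts the sample size into days for an assumed daily traffic figure. The seven-day minimum reflects the full-week rule of thumb rather than a statistical requirement. The function names and the traffic number are hypothetical, and real testing tools may use different methods.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in each variation to detect a relative lift of relative_mde."""
    p1 = baseline                       # control conversion rate
    p2 = baseline * (1 + relative_mde)  # conversion rate we want to be able to detect
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def days_needed(n_per_variant: int, daily_visitors: int,
                variants: int = 2, min_days: int = 7) -> int:
    """Days to reach the sample size, never less than min_days (assumed rule of thumb)."""
    days = math.ceil(n_per_variant * variants / daily_visitors)
    return max(days, min_days)


if __name__ == "__main__":
    # Hypothetical page: 5% baseline conversion, aiming to detect a 20% relative lift.
    n = sample_size_per_variant(baseline=0.05, relative_mde=0.20)
    print("visitors per variant:", n)
    print("days at 1,000 visitors/day:", days_needed(n, daily_visitors=1_000))
```

Halving the minimum detectable effect roughly quadruples the required sample size. This is why small expected improvements on low-traffic pages can take impractically long to confirm.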

Interpreting the Results Correctly

Accurate interpretation of results is crucial for drawing meaningful conclusions from any experiment or study. This involves carefully considering the context of the research, including the study design, data collection methods, and potential limitations. Comparing the findings to existing research and established theories can provide valuable insights. It’s also essential to acknowledge any uncertainties or limitations in the data, avoiding overgeneralization or misrepresentation of the findings.

Statistical significance plays a key role in interpreting results. A statistically significant result indicates that the observed difference would be unlikely to arise by chance alone if the versions actually performed the same. Many teams use a threshold of p < 0.05, often described as 95% confidence. However, statistical significance does not necessarily imply practical significance or real-world importance. With enough traffic, even a tiny improvement can be significant yet too small to matter for the business. The magnitude of the effect, as well as its relevance to the research question, should be carefully considered. Additionally, potential confounding factors, which are variables that could influence both the independent and dependent variables, must be taken into account.
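For conversion-rate tests, one common way to check significance is a two-proportion z-test. This test is a standard statistical method, not one named in the guide. The sketch below assumes large samples (so the normal approximation holds), a sample size fixed in advance, and a single check of the results at the end. Alongside the p-value, it reports a confidence interval for the difference, which shows whether the effect is large enough to matter in practice. The function name and the counts in the example are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int,
                          alpha: float = 0.05) -> dict:
    """Compare conversion rates of A and B; returns difference, z, p-value and CI."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    diff = p_b - p_a
    # Pooled rate under the null hypothesis that both versions convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se_pool = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = diff / se_pool
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided
    # Unpooled standard error for the confidence interval of the difference.
    se_diff = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    ci = (diff - z_crit * se_diff, diff + z_crit * se_diff)
    return {"diff": diff, "z": z, "p_value": p_value, "ci": ci,
            "significant": p_value < alpha}


if __name__ == "__main__":
    # Hypothetical counts: 500 of 10,000 converted on A, 600 of 10,000 on B.
    result = two_proportion_z_test(500, 10_000, 600, 10_000)
    low, high = result["ci"]
    print(f"difference: {result['diff']:.2%}, p-value: {result['p_value']:.4f}")
    print(f"95% CI for the difference: {low:.2%} to {high:.2%}")
```

If the whole confidence interval lies above zero but its lower end is smaller than the improvement the business needs, the result is statistically significant but may not be practically significant.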

Finally, transparency and objectivity are essential for credible interpretation. Clearly stating the methods used and any assumptions made allows others to evaluate the validity of the conclusions. Avoiding bias in interpretation is vital, even when the results may not align with initial hypotheses. Presenting the data in a clear and concise manner, using appropriate visualizations when helpful, allows for easier understanding and facilitates accurate interpretation by others.

Common Testing Mistakes to Avoid

A/B testing is a powerful technique. However, if it is not done correctly, it can waste traffic and lead to the wrong conclusions. One common mistake is insufficient data. Teams often end a test before it reaches its planned sample size or declare a winner as soon as the results first look significant. This greatly increases the risk of acting on a false positive. Another frequent oversight is the lack of clear testing objectives. Without a defined hypothesis and a primary metric chosen in advance, testing becomes aimless, and it becomes tempting to scan many metrics until one happens to look like a win. A well-defined test plan should outline the hypothesis, primary metric, audience, and expected outcomes of the test.

Another common pitfall is poor data quality. Broken tracking, bot traffic, or an uneven split between variations (for example, 55/45 when 50/50 was intended) can lead to inaccurate results and a false sense of confidence. Verify that tracking works and traffic is divided as planned before trusting the numbers. Furthermore, poor communication between marketers, designers, developers, and analysts can delay tests or lead to results being misread. Open communication channels and shared documentation of each test are essential for effective collaboration.

Finally, testing the wrong things can be a costly mistake. If a variation changes the headline, image, and button at the same time, a simple A/B test cannot tell you which change caused the difference. Measuring each element separately requires a multivariate test, which needs considerably more traffic. Spending tests on trivial changes to low-traffic pages is also inefficient, because such tests may never reach a conclusive result. By avoiding these common mistakes and embracing best practices, teams can run more reliable tests and deliver a better experience to their audience.

Scaling What Works

Scaling successful initiatives requires a strategic approach. It’s not simply about replicating what worked on a small scale, but rather understanding the core elements that contributed to success and adapting them for a larger context. This often involves carefully evaluating resources, processes, and potential bottlenecks, and developing plans to address them proactively.

Effective scaling also necessitates clear communication and buy-in from all stakeholders. Teams need to understand the overall goals, their individual roles, and how their contributions fit into the bigger picture. Regular monitoring and evaluation are crucial to track progress, identify challenges, and make adjustments as needed. This iterative process allows for flexibility and continuous improvement throughout the scaling journey.

Key considerations for scaling include: sustainability, ensuring the scaled initiative can be maintained long-term; impact measurement, defining and tracking key metrics to demonstrate the effectiveness of the scaled initiative; and adaptability, maintaining the ability to adjust to changing circumstances and feedback.

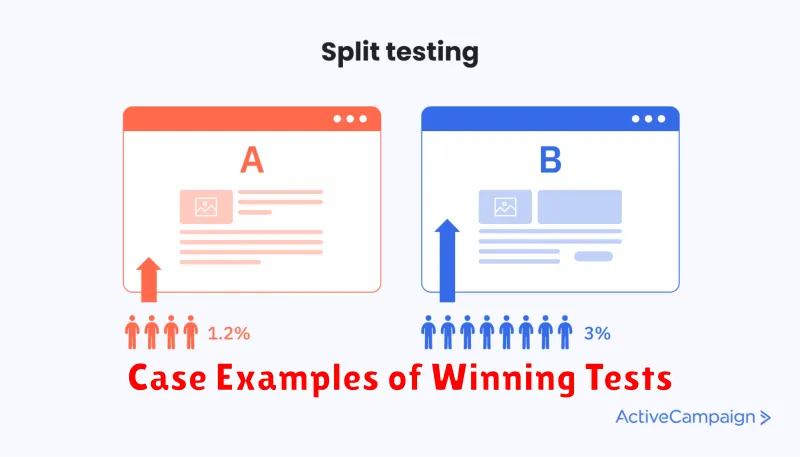

Case Examples of Winning Tests

A/B testing allows businesses to make data-driven decisions, optimizing experiences for better outcomes. One key example involves an e-commerce company testing different call-to-action button colors. The original red button was tested against a blue variant. Results showed a statistically significant increase in click-through rate with the blue button, leading to higher sales conversions and demonstrating the impact of seemingly small changes.

Another compelling example involves a SaaS company optimizing its landing page. The original version featured a long, text-heavy explanation of their product. They A/B tested a shorter, more visual version with a clear value proposition and concise benefits. This new version significantly increased lead generation, illustrating the importance of clarity and conciseness in communicating value to potential customers. The simpler design also reduced bounce rates and improved user engagement.

Finally, a non-profit organization tested different email subject lines for their fundraising campaigns. They compared a generic subject line with a more personalized and urgent one. The personalized and urgent subject line resulted in a substantial increase in open rates and donations, highlighting the power of targeted messaging and emotional connection in driving desired actions.